Here’s a question worth asking about America’s seemingly endless global conflicts: if you kill somebody and there’s no one there (on our side anyway), is the United States still at war? That may prove to be the truly salient question when it comes to the future of America’s war on terror, which is now almost 18 years old and encompasses significant parts of the Greater Middle East and North Africa. Think of it, if you want, as the artificial intelligence, or AI, question.

It’s not, however, the question that Washington is obsessing over. Retired military officials, defense outlets, and pundits alike have instead been pontificating about what it means for the Department of Defense and key Trump officials to regularly insist that the country’s national security focus is shifting from a struggle against insurgent groups like al-Qaeda and the Islamic State to the growing influence of what are termed “near-peer” enemies, a fancy phrase for China and Russia. Speculation about what this refocusing will look like has only grown in the wake of President Trump’s various tweets and statements declaring that America’s endless wars will be coming to a “glorious end” and that “now is the time to bring our troops back home.”

What’s been missing from this conversation is an answer to what should be a relatively easy question: Is the war on terror really being dumped to focus on great power competition?

After President Trump announced in December that he was pulling all 2,000 American troops out of Syria because “we have won against ISIS,” White House spokesperson Sarah Sanders clarified that this does not “signal the end of the global coalition or its campaign.” Breaking with Trump, the Pentagon soon clarified further: 1,000 of those troops will, in fact, remain in Syria, and the U.S.-led coalition will continue its airstrikes and coordinated attacks until the “enduring defeat of ISIS.” Since then, those airstrikes (and accompanying civilian casualties) have only risen.

Almost simultaneously, reports surfaced that Trump had ordered the Pentagon to withdraw 7,000 of the approximately 14,000 soldiers in Afghanistan as part of a bigger plan to draw down the war there. After bipartisan outrage, the administration quickly walked this back, too.

Just weeks later, the United Nations Assistance Mission in Afghanistan reported that, in 2018, U.S.-caused civilian casualties from airstrikes had almost doubled from the previous year. It attributed the increased numbers — themselves probably an underestimate — to the U.S. conducting a greater number of strikes. In 2019 alone, we estimate that more than 100 civilians have been killed by American air attacks there, a phenomenon that shows no signs of decreasing.

Next, the Trump administration floated a rumor that the Pentagon would be drawing down troops across Africa, specifically in Somalia, to focus on that “next frontier of war” (Russia and China). With one stipulation: airstrikes and counterterrorism operations would, of course, continue. The only difference? Under the new plan, responsibility for those airstrikes against militants in Somalia would be shifted ever more to the CIA.

In fact, U.S. airstrikes have already been radically on the rise in the Trump years. According to New America, there have been more of them in Somalia in the past six months than in any full calendar year of the campaign since it began. To add a further twist to Trump’s desire to “stop the endless wars,” Pentagon officials released their own news: that U.S. troops would, in fact, remain in Somalia for another seven years!

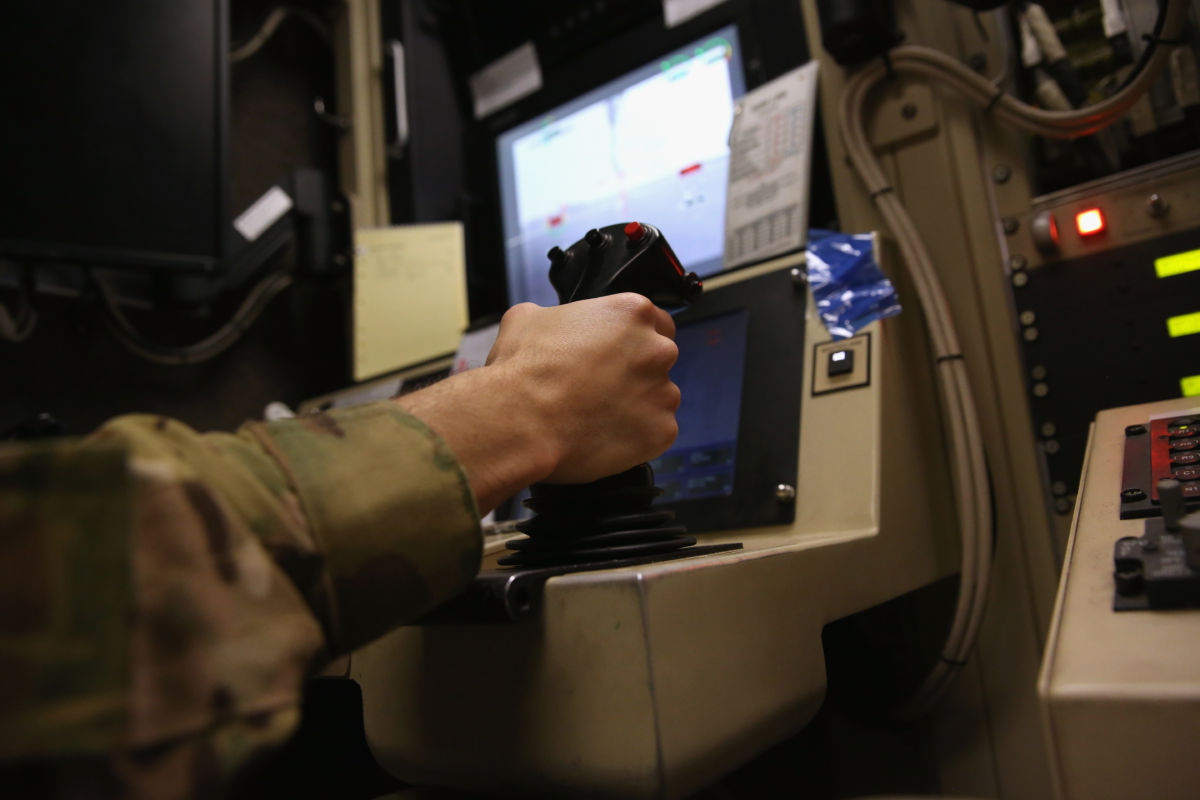

The drone strikes of remote warfare have already changed how Americans think about the wars being carried out in their name (if they think about them at all). On Election Day 2018, for instance, journalist Ezra Klein made the claim that “America isn’t at war.” Although he was subsequently dragged on Twitter for it, the statement points to a bigger problem: America’s wars have become so invisible that even people who theoretically might report on them seem to be forgetting about them.

Across the Greater Middle East and Africa, bombings, raids, missile attacks, and drone strikes have only risen, while Americans here at home are left with the impression that, as President Trump put it, “our boys, our young women, our men” are coming home and the endless wars are finally ending. In fact, all of the plans that purport to essentially draw down, if not end, the global war on terror have resulted so far in anything but that. And given the Trump administration’s new national security and artificial intelligence strategies, this is likely to be just the start of a process that will make it easier for Washington to enter a war that no one here really notices.

Pentagon officials recently made it clear that they want to expand the use of artificial intelligence in warfare in order to maintain America’s “strategic position and prevail on future battlefields.” Unfortunately, it’s not just future battlefields that the public needs to worry about. The U.S. military has already begun making its existing weapons systems more autonomous. This process is only likely to accelerate (in a largely undetectable fashion) thanks to the lack of transparency surrounding the development and application of AI, as well as the fact that private companies, with no commitment to public accountability, will be deeply involved in creating the technology.

Military Tech: The Ultimate Black Box

In popular culture, conversations about the dangers of advanced military technology tend to revolve around killer robots and other dystopian scenarios straight out of the Terminator movies, the film I, Robot, and, more recently, Netflix’s series Black Mirror. For those already in Washington’s foreign policy “blob,” however, discussions are more focused on just one terrifying possibility: that America’s enemies will harness this technology first. Though no one in Washington is likely to deny that killer robots could pose a genuine global threat, the focus on them, and especially on what the Chinese and Russians might do with them, ignores all the intermediate technologies already being operationalized that are likely to lead the world into the eye of the storm.

In fact, the Pentagon’s Defense Innovation Board points out the obvious — that “the impact of AI and machine learning (ML) will be felt in every corner of the Department’s operations.” Indeed, the military is already using artificial intelligence on the battlefield, most notoriously through “Project Maven.” Since early last year, Maven has already been deployed to a half-dozen locations in the Middle East and Africa. It employs artificial intelligence to comb through drone surveillance footage and select targets for future drone strikes in what is advertised as a faster, easier, more efficient manner.

Maven quickly gained notoriety because employees of Google, which the Department of Defense had partnered with to produce it, went public with a petition expressing their outrage over the company’s decision to be in the business of war. Many of its top AI researchers, in particular, were worried that the contract with the Pentagon would prove to be only a first step in the development and use of such nascent technologies in advanced weapons systems. That petition was signed by almost 4,000 Google employees and ultimately resulted in the company (mostly) not renewing the contract.

That, however, hasn’t stopped other companies from competing for roles in Project Maven. In March 2019, in fact, a startup founded by Palmer Luckey, a 26-year-old ardent supporter of Donald Trump, became the latest tech company to quietly win a Pentagon contract as part of the project.

The concerns of those Google employees are made all the more urgent by the inherent limitations of complex AI systems, which leave those systems effectively immune to human oversight. Image recognition systems like Maven make mistakes. They can misidentify images in ways that baffle even their programmers. They are also potentially vulnerable to adversarial attacks by hackers capable of manipulating images in ways the humans receiving the AI system’s output are unlikely to detect.

In reality, complex machine learning remains a remarkably opaque process even for AI experts. In 2017, for instance, a study by JASON, an independent group of scientific advisers to the federal government whose contract was reportedly terminated recently, found that testing to ensure that AI systems behave in predictable ways in all scenarios may not currently be feasible. The researchers warned of the potential consequences for accountability if such systems are nonetheless incorporated into lethal weapons.

Such warnings have not, however, stopped the Pentagon from moving full steam ahead in developing and operationalizing artificial intelligence systems, while the AI arms race heats up around the world. As AI is increasingly integrated into the Pentagon’s everyday operations, the lack of safeguards will only become more glaring.

New tech companies large and small are already winning military contracts that previously went only to old-guard contractors like IBM and Oracle. For instance, Palantir Technologies, a Silicon Valley software company co-founded by billionaire investor (and occasional adviser to President Trump) Peter Thiel, recently beat out Raytheon for a large Army intelligence contract, making it the first Silicon Valley company to win a major contract of this kind from the Pentagon. The intelligence community has also been integrating AI into its operations for years, thanks in large part to a 2013 cloud-computing contract with Amazon. Very little, however, has been made public about how the new technologies are being used by the CIA and other intelligence agencies.

Risking Forever War

Perhaps more dangerous than AI’s technological vulnerabilities is the detrimental impact it could have on the accountability of a Washington now eternally at war. The secrecy surrounding the military and national security uses of AI is already profound and growing. The government already vastly overclassifies information of all sorts, especially information related to artificial intelligence, and relying on private companies to do much of the AI development work for the country’s national security agencies will add yet another layer of secrecy. After all, private government contractors are not subject to the same transparency requirements as government agencies. They are not, for example, subject to the Freedom of Information Act.

Of the 5,000 pages documenting Google’s work on Project Maven, for instance, the Pentagon refused to release a single page, claiming that every last one of them constituted “critical infrastructure information.” If federal agencies are going to claim that all information on AI is too sensitive to be released to the public, then we are entering a new age of government secrecy that will surpass what we’ve already seen.

This is increasingly true abroad as well. The Pentagon, for instance, brought Project Maven to the battlefield in Iraq and Syria in 2018. Shortly thereafter, the U.S. military suddenly stopped releasing any information about airstrikes in those countries, leaving the public here largely ignorant about the surge in civilian casualties that followed. What role, if any, did artificial intelligence play in those killings? Who’s at fault if a computer misidentifies a target (and no one ever knows)? The national security state is clearly not interested in providing answers to such questions.

The new AI technologies will also weaken accountability by distancing policy makers and even the military itself from the consequences of American decisions and actions abroad. Military officials continue to tout AI’s ability to make lethal force ever more precise and efficient, but such blind faith in a flashy new technology, still in the early stages of development, is guaranteed to lead in ever more harmful directions. And when the mistakes begin to happen, when the nightmares commence, don’t hold the computers responsible.

Tech companies, for their part, have made it clear that they will accept no responsibility for how their AI systems are used by the Pentagon and the intelligence agencies. At the 2018 Aspen Security Forum, Teresa Carlson, an Amazon Web Services vice president, told the audience that the company hasn’t “drawn any red lines” regarding the government’s use of its technology and that it is “unwavering” in its support for the U.S. law enforcement, defense, and intelligence communities. Carlson went on to admit that Amazon often doesn’t “know everything they’re actually utilizing the tool for,” but insisted that the government should have the most “innovative and cutting-edge tools” available so that it isn’t bested by its adversaries.

In a letter written to protest their company’s contract to provide AI augmented-reality headsets to the Army, Microsoft employees distilled the essence of this accountability problem: “It will be deployed on the battlefield, and works by turning warfare into a simulated ‘video game,’ further distancing soldiers from the grim stakes of war and the reality of bloodshed.”

In the end, placing AI systems between military commanders and the battlefield may actually make violence more, not less, prevalent, as has been the case with the drone program. The more Washington relies on technology to make combat decisions for military officials, while keeping American soldiers away from its far-flung combat zones (and so American casualties low), the more it is likely to reduce the traditional costs of war in terms of both (non-American) lives and accountability — in other words, the more it may reduce the restraints, political and otherwise, on the country’s actions abroad. Lack of political accountability, after all, is a major reason that American wars are now, to use a recent Pentagon term, “infinite.”

Politicians and experts are increasingly focused on the pivot to great-power competition, as well as on the best plans for winding down American war-on-terror conflicts and bringing the troops home. It’s an admirable goal, but in an increasingly AI-powered world, what if it actually means a future ramping-up of those very wars? After all, as long as bombs are still falling and people are still dying in other countries, our wars aren’t “over” — they’re just easier for Americans to ignore.

In the pursuit of technologies that theoretically are meant to keep the country safer, it’s important to ensure that the national security state is not simply making it easier to wage endless wars by hiding them ever more from public view and transferring the responsibility for fighting them to unaccountable machines. What President Trump is actually proposing simply removes the most visible aspect — the troops — while continuing the bombing of eight countries and secret counterterrorism operations across almost half the globe.
