Another week, another set of reminders that, while Facebook likes to paint itself as an “optimistic” company that’s simply out to help users and connect the world, the reality is very different. This past week, those reminders include a collection of newly released documents suggesting that the company adopted a host of features and policies even though it knew those choices would harm users and undermine innovation.

This month, a member of the United Kingdom’s Parliament published a trove of internal documents from Facebook, obtained as part of a lawsuit by a firm called Six4Three. The emails, memos, and slides shed new light on Facebook’s private behavior before, during, and after the events leading to the Cambridge Analytica scandal.

Here are some key points from the roughly 250 pages of documents.

Facebook Uses New Android Update to Pry Into Your Private Life in Ever-More Terrifying Ways

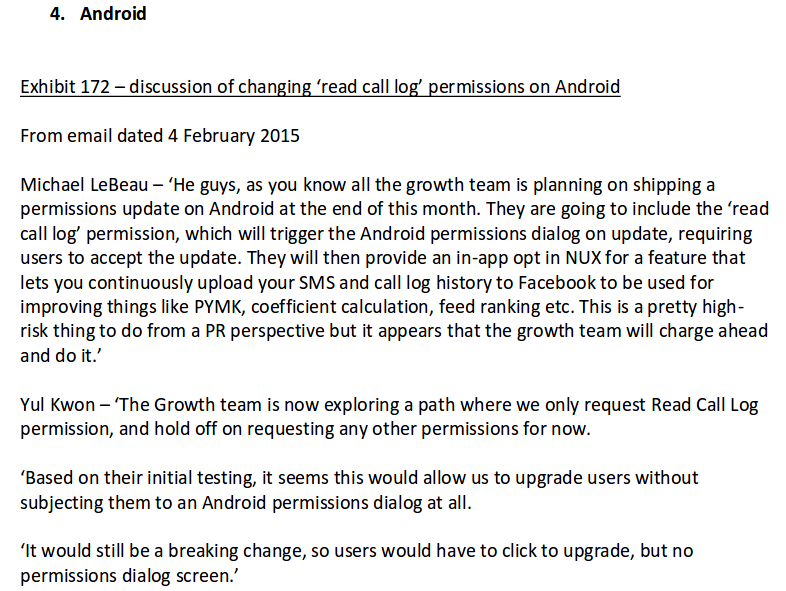

The documents include some of the internal discussion that led to Facebook Messenger’s sneaky logging of Android users’ phone call and text message histories. When a user discovered what Messenger was doing this past spring, it caused public outrage right on the heels of the Cambridge Analytica news. Facebook responded with a “fact check” press release insisting that Messenger had never collected such data without clear user permission.

In newly revealed documents from 2015, however, Facebook employees discuss plans to coerce users into upgrading to a new, more privacy-invasive version of Messenger “without subjecting them to an Android permissions dialog at all,” despite knowing that this kind of misrepresentation of the app’s capabilities was “a pretty high-risk thing to do from a PR perspective.”

This kind of disregard for user consent around phone number and contact information recalls earlier research and investigation exposing Facebook’s misuse of users’ two-factor authentication phone numbers for targeted advertising. Just as disturbing is the mention of using call and text message history to inform the notoriously uncanny PYMK, or People You May Know, feature for suggesting friends.

“I Think We Leak Info to Developers”

A central theme of the documents is how Facebook chose to let other developers use its user data. The documents suggest that Mark Zuckerberg recognized early on that access to Facebook’s data was extremely valuable to other companies, and that Facebook’s leadership was determined to leverage that value.

A little context: in 2010, Facebook launched version 1.0 of the Graph API, an extremely powerful — and permissive — set of tools that third-party developers could use to access data about users of their apps and their friends.

Dozens of emails show how the company debated monetizing access to that data. Company executives proposed several different schemes, from charging certain developers for access per user to requiring that apps “[Facebook] doesn’t want to share data with” spend a certain amount of money per year on Facebook’s ad platform or lose access to their data.

The needs of users themselves were a lesser concern. At one point, in a November 2012 email, an employee mentioned the “liability” risk of giving developers such open access to such powerful information.

Zuckerberg replied:

Of course, two years later, that “leak” is exactly what happened: a shady “survey” app was able to gain access to data on 50 million people, which it then sold to Cambridge Analytica.

“Whitelists” and Access to User Data

In 2015, partly in response to concerns about privacy, Facebook moved to the more restrictive Graph API version 2.0. This version made it more difficult to acquire data about a user’s friends.

However, the documents suggest that certain companies were “whitelisted” and continued to receive privileged access to user data after the API change — without notice to users or transparent criteria for which companies should be whitelisted or not.

Companies that were granted “whitelist” access to enhanced friends data after the API change included Netflix, Airbnb, Lyft, and Bumble, plus the dating service Badoo and its spin-off Hot or Not.

The vast majority of smaller apps, as well as larger companies such as Ticketmaster, were denied access.

User Data as Anticompetitive Lever

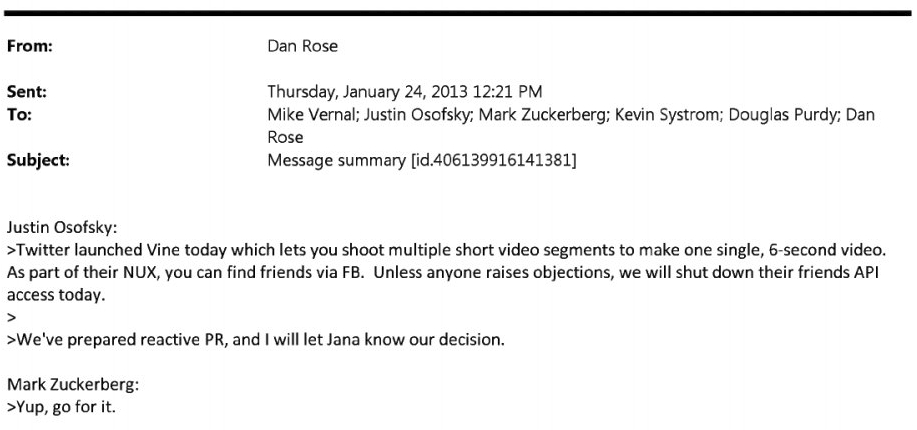

Both before and after Facebook’s API changes, the documents indicate that the company deliberately granted or withheld access to data to undermine its competitors. In an email conversation from January 2013, one employee announced the launch of Twitter’s Vine app, which used Facebook’s Friends API. The employee proposed they “shut down” Vine’s access. Mark Zuckerberg’s response?

“Yup, go for it.”

“Reciprocity”

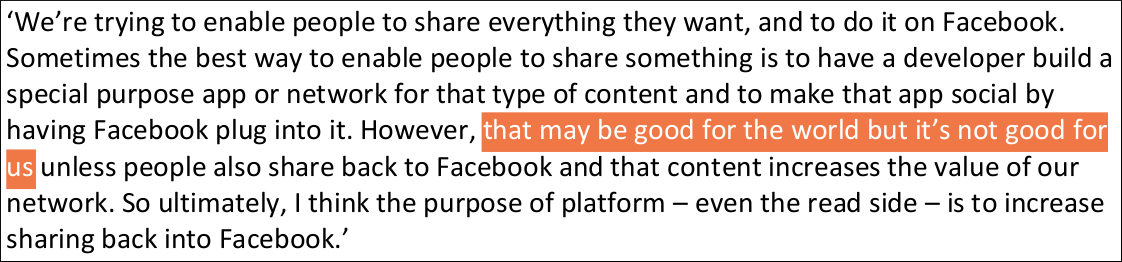

A significant portion of the internal emails mention Facebook enforcing “data reciprocity”: that is, requiring apps that used data from Facebook to allow their users to share all of that data back to Facebook. This is ironic, given Facebook’s staunch refusal to grant reciprocal access to users’ own contact lists after using Gmail’s contact-export feature to fuel its early growth.

In an email dated November 19, 2012, Zuckerberg outlined the company’s thinking:

Emphasis ours.

It’s no surprise that a company would prioritize what’s good for it and its profit, but it is a problem when Facebook tramples user rights and innovation to get there. And while Facebook demanded reciprocity from its developers, it withheld access from its competitors.

False User Security to Scope Out Competitors

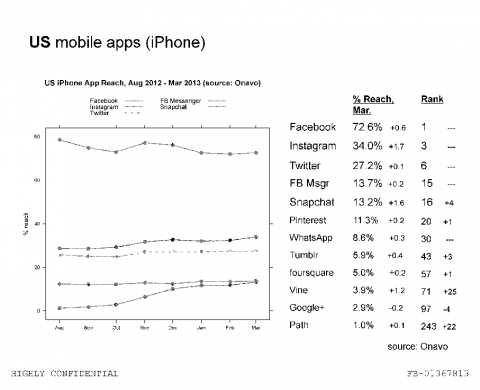

Facebook acquired Onavo Protect, a “secure” VPN app, in fall 2013. The app was marketed as a way for users to protect their web activity from prying eyes, but it appears that Facebook used it to collect data about all the apps on a user’s phone and immediately began mining that data to gain a competitive edge. Newly released slides suggest Facebook used Onavo to measure the reach of competing social apps including Twitter, Vine, and Path, and to measure its penetration in emerging markets such as India.

In August, Apple finally banned Onavo from its App Store for collecting such data in violation of its Terms of Service. These documents suggest that Facebook was collecting app data, and using it to inform strategic decisions, from the very start.

Everything But Literally Selling Your Data

In response to the documents, several Facebook press statements as well as Mark Zuckerberg’s own letter on Facebook defend the company with the refrain, “We’ve never sold anyone’s data.”

That defense fails, because it doesn’t address the core issues. Sure, Facebook does not sell user data directly to advertisers. It doesn’t have to. Facebook has tried to exchange access to users and their information in other ways. In another striking example from the documents, Facebook appeared to offer Tinder privileged access during the API transition in return for use of Tinder’s trademarked term “Moments.” And, of course, Facebook keeps the lights on by selling access to specific users’ attention in the form of targeted advertising spots.

No matter how Zuckerberg slices it, your data is at the center of Facebook’s business. Based on these documents, it seems that Facebook sucked up as much data as possible through “reciprocity” agreements with other apps, and shared it with insufficient regard for the consequences for users. Then, after rolling back its permissive data-sharing APIs, the company apparently used privileged access to user data either as a lever to get what it wanted from other companies or as a weapon against its competitors. You are, and always have been, Facebook’s most valuable product.
